adampj

Member

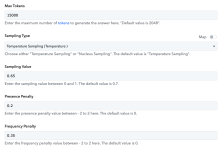

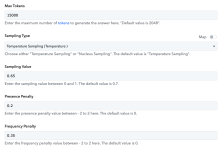

I’m running into another parameter issue, this time with GPT-4.1 (gpt-4.1-2025-04-14) when using the ChatGPT (Structured AI Output) action in Pabbly. I set the Max Tokens field to 15000 (screenshots attached), but responses are still capped at 2048 tokens, which appears to be the connector’s default. The debug output confirms that Usage Completion Tokens never exceeds 2048, even though the model supports a much higher output limit.

This suggests that the Pabbly integration isn’t passing the max_tokens parameter correctly (or at all) to the API, and is instead defaulting to 2048. It looks like the same underlying issue as with GPT-5 — the field labeled “Max Tokens” is either ignored or mapped incorrectly.

Can Pabbly confirm whether max_tokens is supported in the current integration? And if not, is there a workaround to request completions above 2048 tokens (e.g., custom API call, mapping override, or update to the connector)?
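For reference, here is a minimal sketch of the direct request I would expect the connector to send, assuming the standard OpenAI Chat Completions endpoint and its `max_tokens` field. The helper names `build_request` and `looks_capped` are my own and purely illustrative, and I don't know how Pabbly builds its payload internally. The second helper checks a response for the symptom I'm seeing: exactly 2048 completion tokens with a `finish_reason` of `length`.

```python
import json
import urllib.request

# Standard OpenAI Chat Completions endpoint.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_request(api_key, prompt, max_tokens=15000, model="gpt-4.1-2025-04-14"):
    """Build (but do not send) a chat completion request with an explicit max_tokens.

    Hypothetical helper: it only illustrates where max_tokens belongs in the body.
    """
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,  # the value the "Max Tokens" field should map to
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def looks_capped(response_json, cap=2048):
    """Return True if the response was cut off at exactly `cap` completion tokens."""
    usage = response_json.get("usage", {})
    choices = response_json.get("choices", [])
    finish = choices[0].get("finish_reason") if choices else None
    return usage.get("completion_tokens") == cap and finish == "length"
```

Sending it would be `urllib.request.urlopen(build_request(key, prompt))` with a real API key. If a generic HTTP/API request step in Pabbly can send the same JSON body, that might serve as the workaround until the connector is fixed. For the GPT-5 case, my understanding is that the API expects `max_completion_tokens` rather than `max_tokens`, so the body would need that field instead.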

Screenshots:
